The Essentials

Data quality measures how well data serves its intended purpose. High-quality data is accurate, complete, consistent, timely, and trustworthy. Poor-quality data leads to bad decisions, wasted effort, and eroded trust.

Quality isn’t binary - data can be perfect for one use case and useless for another. A dataset with 95% accuracy might be fine for marketing analysis but unacceptable for financial reporting.

The goal isn’t perfect data. It’s data that’s good enough for how you’re using it, with visibility into its limitations.

The four core dimensions of data quality

Why Data Quality Matters

Decision Quality

Bad data leads to bad decisions. If your customer churn numbers are wrong, your retention strategy will be wrong. If revenue figures don’t reconcile, forecasts are unreliable. Garbage in, garbage out - at business scale.

Trust

Once stakeholders lose trust in data, they stop using it. They go back to gut feel, spreadsheets, and asking around. Rebuilding trust takes longer than building it initially.

Efficiency

Poor quality creates rework. Analysts spend time cleaning data instead of analyzing it. Engineers debug data issues instead of building features. Support handles complaints about incorrect reports.

Compliance

Regulations require accurate data. GDPR mandates accurate personal data. SOX requires reliable financial reporting. Healthcare regulations demand accurate patient records. Quality failures become compliance failures.

Cost

Bad data has direct costs: incorrect invoices, missed opportunities, regulatory fines. It has indirect costs too: time spent fixing problems, delayed projects, lost credibility.

Data Quality Dimensions

Accuracy

Does data reflect reality?

- Customer addresses that actually exist

- Order amounts that match what was charged

- Inventory counts that match physical stock

Accuracy is the most fundamental dimension. Inaccurate data is wrong data.

Completeness

Is all required data present?

- No missing values where data should exist

- All expected records captured

- Required fields populated

Completeness means having the data you need, not necessarily having all possible data.

Consistency

Does data agree across systems and over time?

- Same customer, same email address everywhere

- Totals that match their components

- Historical data that doesn’t change unexpectedly

Inconsistency creates confusion and undermines trust.

Timeliness

Is data available when needed?

- Real-time dashboards updated in real-time

- Daily reports available at start of business

- Alerts triggered before issues escalate

Stale data is often useless data.

Validity

Does data conform to defined formats and rules?

- Dates in expected formats

- Values within expected ranges

- References that point to existing records

Invalid data breaks downstream processing and analysis.

Uniqueness

Is each entity represented once?

- No duplicate customer records

- No repeated transactions

- Clear identity resolution

Duplicates inflate metrics and complicate analysis.

Measuring Data Quality

Quality Metrics

Quantify quality across dimensions:

Accuracy Rate = Correct records / Total records

Completeness Rate = Non-null values / Expected values

Consistency Rate = Matching records across systems / Total records

Timeliness = Time from event to data availability

Validity Rate = Valid records / Total records

Uniqueness Rate = Unique records / Total records

Quality Score

Combine metrics into an overall quality score:

Quality Score = (w1 × Accuracy + w2 × Completeness + w3 × Consistency + ...) / Total Weight

Weight dimensions based on importance for your use case. Financial data might weight accuracy heavily. Marketing data might prioritize completeness.

Quality SLAs

Define acceptable quality levels:

- “Customer email addresses must be 99% valid”

- “Daily revenue figures must be available by 6 AM”

- “Order data must match source systems within 0.1%”

SLAs make quality expectations explicit and measurable.

Common Quality Problems

Missing Data

Symptoms: Null values, empty fields, incomplete records

Causes:

- Optional fields not enforced at source

- Integration failures losing records

- Schema mismatches dropping columns

Solutions:

- Add not-null constraints where appropriate

- Monitor for completeness drops

- Validate record counts across systems

Duplicate Data

Symptoms: Inflated counts, multiple records for same entity

Causes:

- Multiple data sources without deduplication

- Retry logic creating duplicate inserts

- Lack of unique constraints

Solutions:

- Implement deduplication logic

- Use idempotent processing

- Add unique constraints and indexes

Inconsistent Data

Symptoms: Same entity with different values across systems

Causes:

- No master data management

- Unsynchronized updates

- Different business rules in different systems

Solutions:

- Establish single source of truth

- Implement data synchronization

- Document and reconcile business rules

Stale Data

Symptoms: Reports showing old information, delayed alerts

Causes:

- Pipeline delays or failures

- Batch processing too infrequent

- Bottlenecks in data flow

Solutions:

- Monitor data freshness

- Alert on pipeline delays

- Optimize or parallelize processing

Invalid Data

Symptoms: Processing failures, incorrect calculations

Causes:

- No input validation

- Schema evolution without handling

- Edge cases not anticipated

Solutions:

- Validate data at ingestion

- Handle schema changes gracefully

- Test with edge cases

Data Quality Strategy

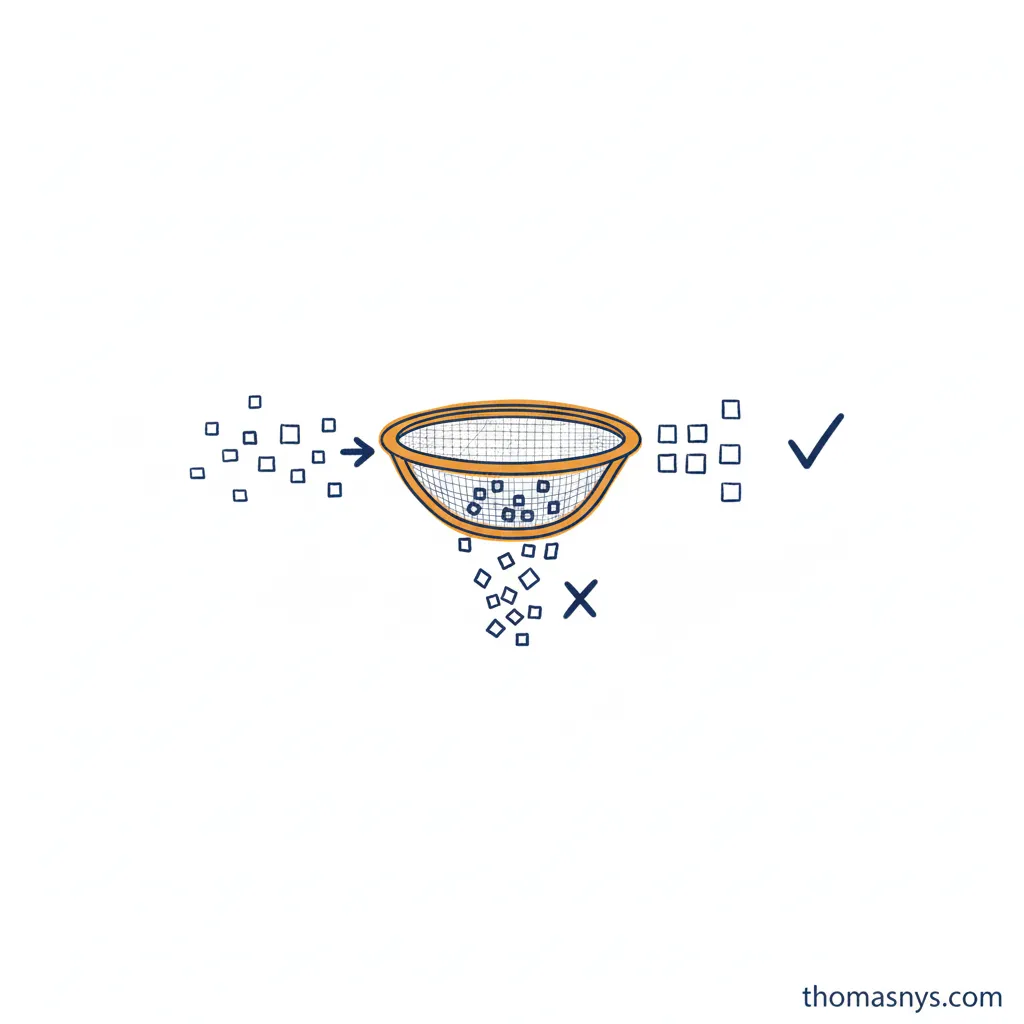

Prevention

Stop quality problems before they happen:

- Source validation - Reject bad data at the entry point

- Schema enforcement - Define and enforce data contracts

- Input constraints - Limit what values are acceptable

Prevention is cheaper than remediation.

Detection

Find problems quickly when they occur:

- Automated testing - Run quality checks on every pipeline run

- Anomaly detection - Alert on unexpected patterns

- Monitoring dashboards - Visibility into quality metrics

Early detection limits damage.

Correction

Fix problems when found:

- Automated remediation - Fix known issues automatically

- Manual review workflows - Route exceptions to humans

- Backfill processes - Correct historical data when needed

Have a process before you need it.

Communication

Keep stakeholders informed:

- Quality reports - Regular visibility into data health

- Incident communication - Alert consumers to known issues

- Documentation - Clear understanding of data limitations

Transparency builds trust even when problems occur.

Quality Testing

Schema Tests

Verify data structure:

-- Column exists and has correct type

SELECT column_name, data_type

FROM information_schema.columns

WHERE table_name = 'orders'

Null Tests

Check for unexpected nulls:

-- No null values in required field

SELECT COUNT(*)

FROM orders

WHERE customer_id IS NULL

Uniqueness Tests

Verify no duplicates:

-- Primary key is unique

SELECT order_id, COUNT(*)

FROM orders

GROUP BY order_id

HAVING COUNT(*) > 1

Referential Integrity Tests

Check foreign key relationships:

-- All orders have valid customers

SELECT COUNT(*)

FROM orders o

LEFT JOIN customers c ON o.customer_id = c.customer_id

WHERE c.customer_id IS NULL

Range Tests

Verify values within bounds:

-- Order amounts are positive

SELECT COUNT(*)

FROM orders

WHERE total_amount <= 0

Freshness Tests

Check data is current:

-- Data updated within expected window

SELECT MAX(updated_at)

FROM orders

WHERE updated_at < CURRENT_TIMESTAMP - INTERVAL '1 hour'

Tools for Data Quality

dbt Tests

Built-in testing for dbt projects:

# schema.yml

models:

- name: orders

columns:

- name: order_id

tests:

- unique

- not_null

- name: customer_id

tests:

- not_null

- relationships:

to: ref('customers')

field: customer_id

- name: total_amount

tests:

- not_null

- dbt_utils.accepted_range:

min_value: 0

Great Expectations

Python-based data validation:

import great_expectations as gx

context = gx.get_context()

validator = context.get_validator(

batch_request=batch_request,

expectation_suite_name="orders_suite"

)

validator.expect_column_values_to_not_be_null("customer_id")

validator.expect_column_values_to_be_between("total_amount", min_value=0)

validator.expect_column_values_to_be_unique("order_id")

Soda

SQL-based quality checks:

# checks.yml

checks for orders:

- row_count > 0

- missing_count(customer_id) = 0

- duplicate_count(order_id) = 0

- min(total_amount) >= 0

- freshness(created_at) < 1h

Monte Carlo, Anomalo, Bigeye

Commercial data observability platforms that provide:

- Automated anomaly detection

- Quality monitoring dashboards

- Incident management

- Root cause analysis

Quality and Governance

Data quality is a core pillar of data governance:

- Ownership - Someone must be accountable for each dataset’s quality

- Standards - Define what “good quality” means for your organization

- Processes - How quality issues are identified and resolved

- Metrics - How quality is measured and reported

Quality without governance is reactive firefighting. Governance without quality metrics is paperwork.

Getting Started

1. Define Critical Data Elements

Not all data needs the same quality attention. Identify the data that matters most - what drives key decisions, what has compliance requirements, what powers customer-facing products.

2. Establish Baselines

Measure current quality before trying to improve it. You can’t demonstrate progress without knowing where you started.

3. Implement Basic Tests

Start with simple tests on critical data:

- Not null on required fields

- Unique constraints on identifiers

- Freshness on time-sensitive data

4. Create Visibility

Build a quality dashboard. Make quality metrics visible to data producers and consumers.

5. Assign Ownership

Every dataset needs an owner accountable for quality. Quality without ownership is everyone’s problem and no one’s priority.

Frequently Asked Questions

What is data quality?

Why is data quality important?

How do you measure data quality?

What tools help with data quality?

How do I improve data quality?

Related Reading

Data Foundations

- What Is Data Governance? - The framework quality operates within

- What Is Data Lineage? - Tracing quality issues to their source

- What Is Data Integration? - Where quality problems often originate

- What Is a Data Platform? - Building quality into your platform

- What Is Data Architecture? - Designing for quality

AI & ML

- AI & Data Architecture - Why AI projects fail without quality data

- What Is MLOps? - Data quality in ML operations

Related Topics

- Building Data Teams - Teams accountable for quality

- What Is Technical Debt? - How quality issues create debt

- The Data Contract Pattern - Agreements that enforce quality

- Data Quality as Organizational Signal - How quality issues reveal organizational dysfunction

- Data Quality Crisis: 77% Admit Failure - Why quality is everyone’s problem

- The Dashboard Nobody Trusted - What happens when quality erodes trust

Last updated: 1 March 2026