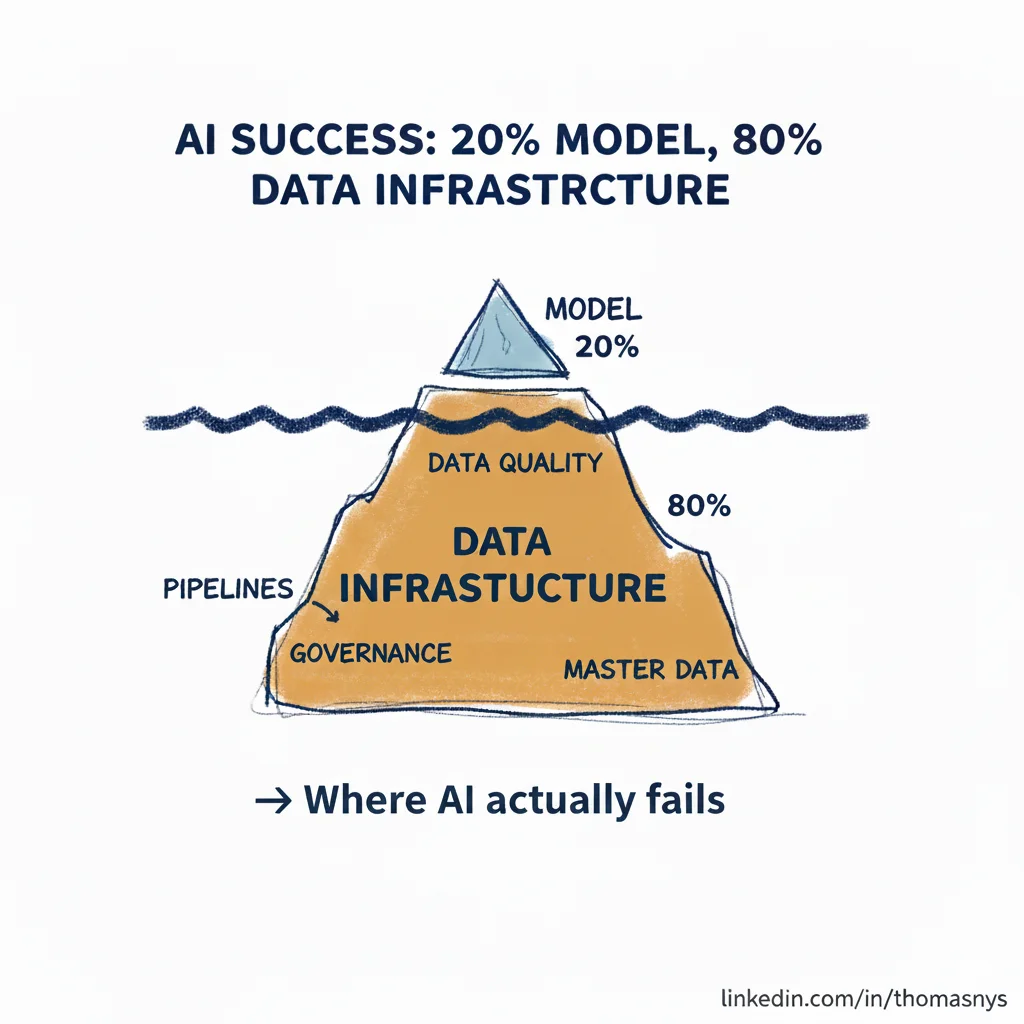

Rule of thumb: AI success is 20% model and 80% data infrastructure.

I’ve reviewed dozens of failed AI initiatives. The pattern is often the same.

The POC worked beautifully on clean, curated sample data. Then production happened.

The data wasn’t where they thought it was. Customer records split across many systems. Product data with three different schemas. No master data management.

The data wasn’t what they thought it was. Labels were inconsistent. Historical records incomplete. Business rules encoded in people’s heads, not systems.

The data wasn’t ready for scale. Batch pipelines that took 8 hours. No real-time feeds. No versioning for training data.

Many AI teams report spending around 80% of their time on data preparation. That’s not a failure of AI. It’s a failure of data infrastructure.

And here’s the painful part: surveys have found around 42% of data scientists say their results aren’t used by business decision makers. The models work. The trust doesn’t.

The organizations winning at AI aren’t the ones with better models. They’re the ones who fixed their data platform first. This is why solid data architecture - with clear ownership, integration patterns, and governance - is the prerequisite for AI success.

Before your next AI initiative, ask:

- Do we have a single source of truth for this domain?

- Can we access this data reliably at scale?

- Is the data quality sufficient for production decisions?

A platform review can assess whether your data foundation is ready for AI.

What’s the biggest data gap blocking your AI ambitions?