Testing data quality after transformation is like tasting the dish after it’s plated. Too late.

Most teams test data quality where they notice problems: in dashboards and reports. By then, bad data has propagated through your entire pipeline.

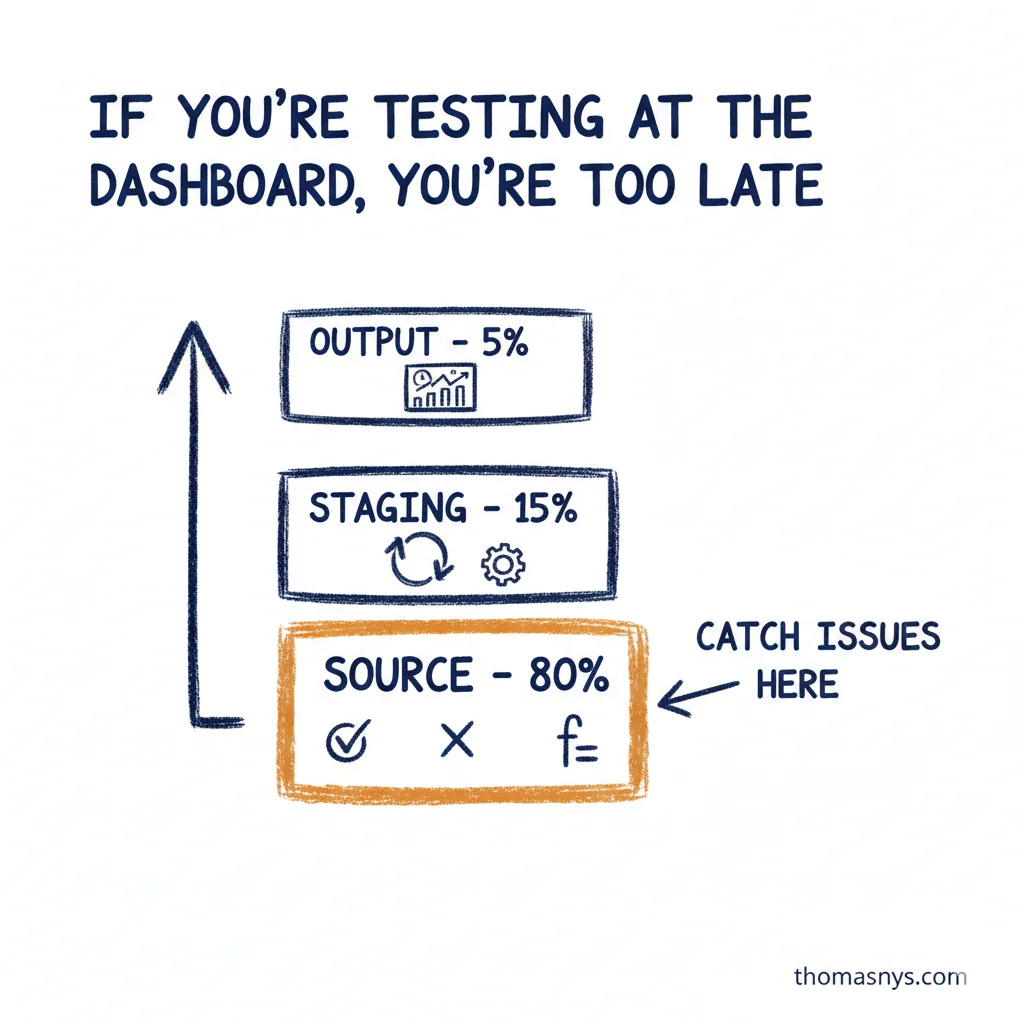

The testing hierarchy flips this:

Source layer (80% of tests): Schema validation, null checks, format rules. Catch issues where data enters your system.

Staging layer (15%): Logic-aware tests during transformations. Join integrity, aggregation accuracy.

Data mart/output (5%): Business rules and critical metrics. The executive dashboard number that can’t be wrong.
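Here’s a minimal sketch of what each layer’s checks might look like. It uses plain Python over lists of dicts so it doesn’t depend on any tool. Every function name (`check_schema`, `check_join_integrity`, `check_metric_range`, etc.) and every sample rule is a hypothetical illustration, not a dbt, Great Expectations, or DQX API.

```python
import math
import re
from collections import defaultdict

Row = dict  # one record: column name -> value

# --- Source layer: schema, null, and format checks where data enters ---

def check_schema(rows: list[Row], expected: dict[str, type]) -> list[str]:
    """Each row must have exactly the expected columns; non-null values must match their type."""
    failures = []
    for i, row in enumerate(rows):
        if set(row) != set(expected):
            failures.append(f"row {i}: columns {sorted(row)} != {sorted(expected)}")
            continue
        for col, typ in expected.items():
            value = row[col]
            if value is not None and not isinstance(value, typ):
                failures.append(f"row {i}: {col}={value!r} is not {typ.__name__}")
    return failures

def check_not_null(rows: list[Row], column: str) -> list[str]:
    """Flag rows where the column is missing or None."""
    return [f"row {i}: {column} is null" for i, row in enumerate(rows) if row.get(column) is None]

def check_format(rows: list[Row], column: str, pattern: str) -> list[str]:
    """Non-null values must fully match the regex (nulls are left to check_not_null)."""
    regex = re.compile(pattern)
    return [
        f"row {i}: {column}={row[column]!r} does not match {pattern}"
        for i, row in enumerate(rows)
        if row.get(column) is not None and not regex.fullmatch(str(row[column]))
    ]

# --- Staging layer: logic-aware checks on joins and aggregations ---

def check_join_integrity(child: list[Row], parent: list[Row], key: str) -> list[str]:
    """No orphans: every key in the child table must exist in the parent table."""
    parent_keys = {row[key] for row in parent}
    return [f"orphan {key}={row[key]!r}" for row in child if row[key] not in parent_keys]

def check_aggregation(detail: list[Row], summary: list[Row], group_key: str, value_col: str) -> list[str]:
    """Summary totals must equal the sum of the detail rows they were built from."""
    expected = defaultdict(float)
    for row in detail:
        expected[row[group_key]] += row[value_col]
    failures = []
    for row in summary:
        want = expected.get(row[group_key], 0.0)
        if not math.isclose(row[value_col], want, rel_tol=1e-9, abs_tol=1e-9):
            failures.append(f"{group_key}={row[group_key]!r}: {row[value_col]} != {want}")
    missing = set(expected) - {row[group_key] for row in summary}
    failures += [f"{group_key}={g!r}: missing from summary" for g in sorted(missing, key=str)]
    return failures

# --- Output layer: business rules on the few metrics that can't be wrong ---

def check_metric_range(value: float, name: str, low: float, high: float) -> list[str]:
    """A critical metric must fall inside a plausible business range."""
    return [] if low <= value <= high else [f"{name}={value} outside [{low}, {high}]"]
```

Each check returns a list of failure messages, so an empty list means the check passed. Note how many cheap, generic checks sit at the source layer compared with the single business rule at the output layer. That mirrors the 80/15/5 split.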

When you test primarily at source, most bad data is stopped before it reaches downstream models. You’re not debugging production dashboards at 9pm because a vendor changed their schema.
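A hypothetical fail-fast gate makes the point concrete: source checks run before any transformation, so a renamed vendor column stops the run at the boundary instead of surfacing later in a dashboard. `EXPECTED_COLUMNS`, `validate_source`, `transform`, and `run_pipeline` are illustrative names for this sketch, not part of any library.

```python
class SourceCheckFailed(Exception):
    """Raised when a batch fails source-layer checks; nothing downstream runs."""

# Hypothetical data contract agreed with the vendor.
EXPECTED_COLUMNS = {"order_id", "customer_id", "amount"}

def validate_source(batch: list[dict]) -> list[str]:
    """Cheap ingestion checks: exact column set, then a null check on amount."""
    failures = []
    for i, row in enumerate(batch):
        missing = EXPECTED_COLUMNS - set(row)
        extra = set(row) - EXPECTED_COLUMNS
        if missing or extra:
            failures.append(f"row {i}: missing={sorted(missing)} extra={sorted(extra)}")
        elif row["amount"] is None:
            failures.append(f"row {i}: amount is null")
    return failures

def transform(batch: list[dict]) -> dict[str, float]:
    """Stand-in for staging/mart logic: total amount per customer."""
    totals: dict[str, float] = {}
    for row in batch:
        totals[row["customer_id"]] = totals.get(row["customer_id"], 0.0) + row["amount"]
    return totals

def run_pipeline(batch: list[dict]) -> dict[str, float]:
    failures = validate_source(batch)
    if failures:
        # Stop at the boundary: transform() never sees the bad batch.
        raise SourceCheckFailed("; ".join(failures))
    return transform(batch)
```

If the vendor silently renames `amount` to `amt`, `run_pipeline` raises `SourceCheckFailed` with a message naming the missing and extra columns, rather than producing a wrong total several models later.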

dbt tests, Great Expectations, and Databricks DQX make this straightforward. The architecture principle matters more than the tool.

Where do most of your data quality tests run today? Source, staging, or output?