Global enterprises waste roughly $100 billion a year on cloud. Odds are 20-40% of your own cloud bill is part of that.

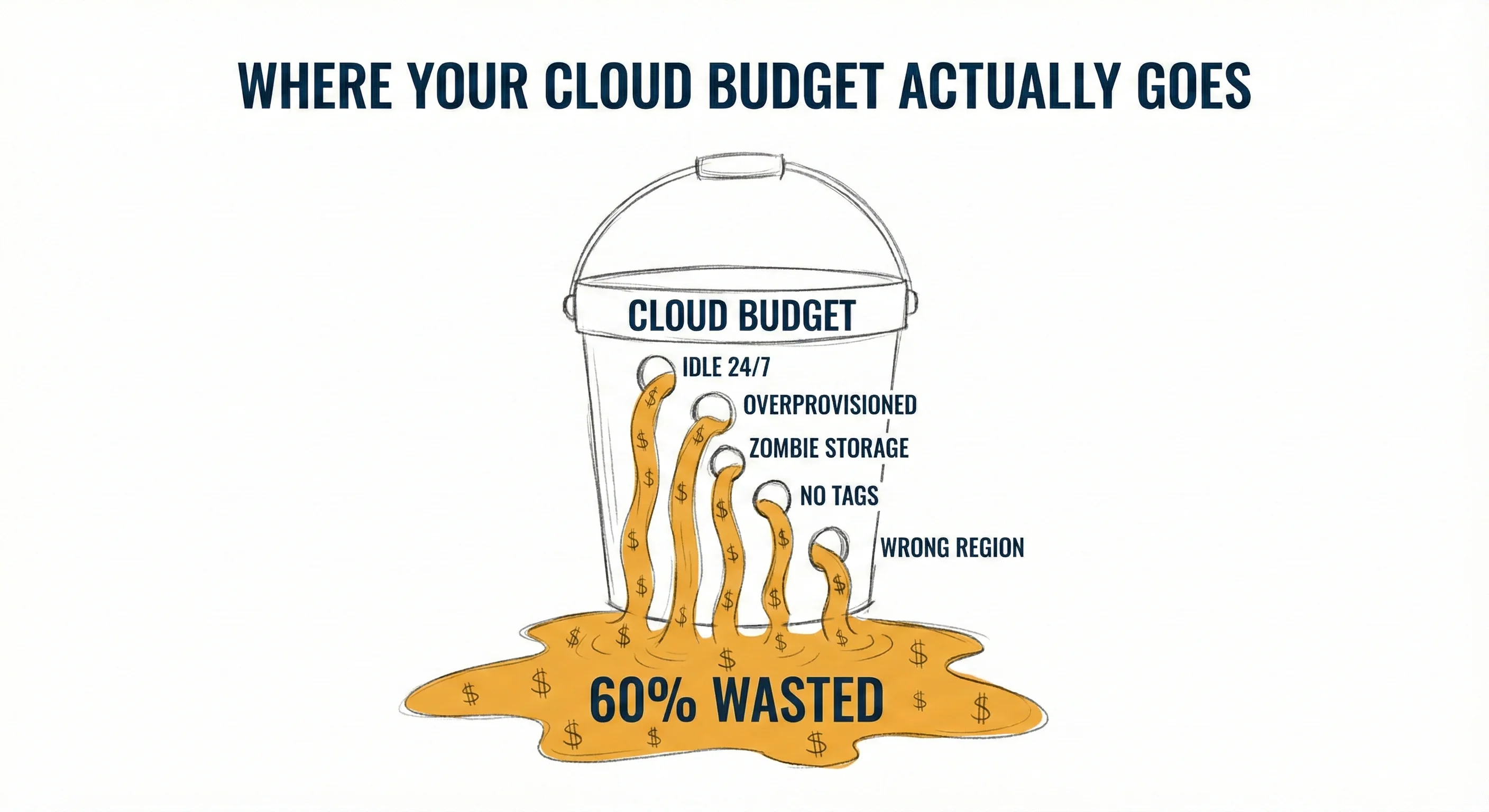

Gartner predicts that through 2025, over 60% of cloud spending will be wasted. Not “could be optimized” wasted. Gone. Paying for resources nobody uses.

Here’s how it happens:

- Dev environments running 24/7 when they're used 8 hours a day.
- Overprovisioned instances, because rightsizing takes effort.
- Storage that grows but never shrinks.
- Reserved capacity that doesn't match actual usage.
- No tagging, so nobody knows which team is spending what.
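The first item is easy to put a number on. Below is a minimal Python sketch that rests on three assumptions. Compute is billed per running hour at on-demand rates. The dev instance costs a hypothetical $0.20/hour. The schedule runs only on weekdays, which is our assumption; the post says only "8 hours a day". `scheduling_savings` and its parameters are illustrative names, not any cloud provider's API.

```python
# Illustrative numbers only; not taken from any real bill.
HOURS_PER_WEEK = 24 * 7  # 168 hours in an always-on week


def scheduled_hours_per_week(hours_per_day: float, days_per_week: int) -> float:
    """Hours an instance runs when it is only on during working hours."""
    return hours_per_day * days_per_week


def scheduling_savings(
    hourly_rate: float, hours_per_day: float = 8, days_per_week: int = 5
) -> dict:
    """Compare an always-on instance with one scheduled to working hours.

    Assumes per-running-hour billing; ignores storage, which usually keeps
    billing while an instance is stopped. days_per_week=5 is an assumption.
    """
    always_on = hourly_rate * HOURS_PER_WEEK
    scheduled = hourly_rate * scheduled_hours_per_week(hours_per_day, days_per_week)
    return {
        "always_on_weekly": always_on,
        "scheduled_weekly": scheduled,
        "savings_fraction": 1 - scheduled / always_on,
    }


if __name__ == "__main__":
    result = scheduling_savings(hourly_rate=0.20)  # hypothetical $0.20/hour dev box
    print(f"Always on: ${result['always_on_weekly']:.2f}/week")
    print(f"Scheduled: ${result['scheduled_weekly']:.2f}/week")
    print(f"Savings:   {result['savings_fraction']:.0%}")
```

An 8-hour, 5-day schedule runs 40 of the week's 168 hours, so compute spend for that instance falls by about 76%. Running 8 hours every day (`days_per_week=7`) still cuts it by two thirds. Attached storage typically keeps billing while an instance is stopped, so real-world savings will be somewhat lower.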

The cloud didn’t create this problem. It just made it invisible until the invoice arrived.

The good news: organizations can reduce cloud costs by 20-40% without sacrificing performance. Quick wins (rightsizing, scheduling, and reserved instance optimization) typically deliver 10-20% savings within 60-90 days.

But here's what most miss: cloud cost optimization isn't an ops problem. It's an architecture problem. Where do you place workloads? How do you design for elasticity? Do your teams understand the cost of their decisions?
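Teams can only understand the cost of their decisions if spend is attributed to them, and tagging is how that attribution happens. Below is a minimal sketch. It assumes billing line items have already been exported along with each resource's tags. `LineItem`, `spend_by_team`, `untagged_share`, the `team` tag key, and the sample costs are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class LineItem:
    """A hypothetical billing line item: a resource, its cost, and its tags."""
    resource_id: str
    cost: float
    tags: dict[str, str] = field(default_factory=dict)


def spend_by_team(items: list[LineItem], tag_key: str = "team") -> dict[str, float]:
    """Sum cost per value of tag_key; resources missing that tag go to 'UNTAGGED'."""
    totals: dict[str, float] = defaultdict(float)
    for item in items:
        owner = item.tags.get(tag_key, "UNTAGGED")
        totals[owner] += item.cost
    return dict(totals)


def untagged_share(totals: dict[str, float]) -> float:
    """Fraction of total spend that no team owns (0.0 if there is no spend)."""
    total = sum(totals.values())
    return totals.get("UNTAGGED", 0.0) / total if total else 0.0


if __name__ == "__main__":
    items = [
        LineItem("vm-api-1", 1200.0, {"team": "payments"}),
        LineItem("vm-etl-7", 800.0, {"team": "data"}),
        LineItem("bucket-logs", 500.0),  # no tags at all
        LineItem("vm-test-3", 500.0, {"env": "dev"}),  # tagged, but not by team
    ]
    totals = spend_by_team(items)
    # Print teams from largest to smallest spend.
    for team, cost in sorted(totals.items(), key=lambda kv: -kv[1]):
        print(f"{team:10s} ${cost:,.2f}")
    print(f"Untagged share: {untagged_share(totals):.0%}")
```

With this sample data, a third of the spend lands in `UNTAGGED`. That includes a resource that has tags, just not the one used for ownership. This bucket is the number worth driving toward zero, because spend no team owns is spend no team will treat as a design constraint.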

The companies controlling cloud costs in 2026 won’t be the ones with the best FinOps tools. They’ll be the ones where engineers see cost as a design constraint, not someone else’s problem.

What percentage of your cloud spend could you cut tomorrow without anyone noticing?